Our Stance on AI

Hireguide AI Explainability Statement

This document explains when, how, and why Hireguide uses Artificial Intelligence in its hiring platform, specifically focusing on our AI Screener product, and how we design the system to support transparency, promote fair treatment, and keep human decision-makers in control. It is intended for external AI ethics and compliance review committees in the U.S. and abroad. We detail our AI Screener’s design, legal compliance measures, and safeguards (bias mitigation, oversight, privacy) to demonstrate Hireguide’s commitment to ethical AI use in recruitment.

Introduction: Why We Use AI in Hiring

Hireguide’s AI-driven interviewing tool is designed to support – not replace – human recruiters in finding the right candidates efficiently and consistently. We leverage AI in our hiring platform for several key reasons:

Consistency and Fair Treatment

Every candidate faces the same predefined questions and is evaluated against the same job-related criteria. Human interviews can be inconsistent; when configured with the same questions and rubric, our system is designed to apply those standards consistently across candidates. This standardized approach can reduce some sources of variability in initial screenings and support more comparable evaluations.

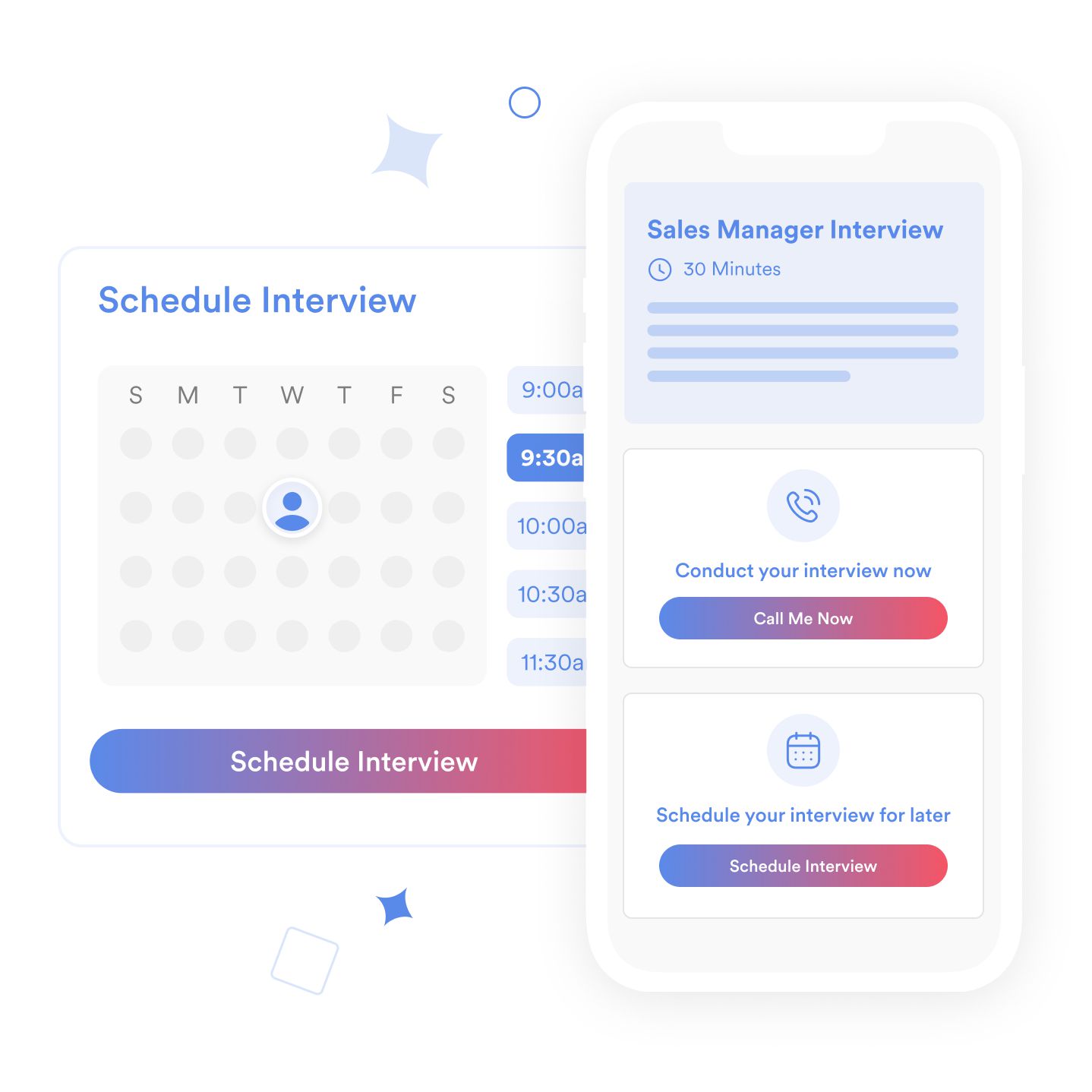

Efficiency and Scale

AI enables recruiters to handle high volumes of applicants quickly. Routine screening that might take a human team many hours can be completed faster by AI. This reduces time-to-hire and frees up human interviewers to focus on in-depth assessments of top candidates. The AI can conduct structured interviews 24/7, allowing candidates to interview at their convenience and organizations to respond swiftly.

Enhanced Candidate Experience

Candidates can complete interviews on their own schedule, without having to coordinate times or travel. They all receive the same structured opportunity to showcase their skills. The AI Screener maintains a friendly, professional tone and helps ensure important follow-up prompts are asked consistently. By providing analysis to the hiring team, our platform can also help recruiters update candidates on next steps more quickly. Overall, the process is designed to be convenient and consistent, improving the candidate’s experience.

Data-Driven Insights

Our AI provides a rich analysis of each candidate’s responses. It can highlight specific strengths or areas of concern based on the criteria defined for the job. Over time, aggregated and de-identified data may help identify patterns that correlate with downstream outcomes, where clients have appropriate outcome data, permissions, and governance in place. Such analytics are hard to derive from traditional interviews but are made possible at scale with AI. These data-driven insights help recruiters make more informed decisions.

Importantly, Hireguide uses AI only to assist human decision-makers; it does not make hiring decisions on its own. The AI’s role is to conduct structured interviews and provide recommendations with explanations, so that human recruiters can make faster, well-informed hiring decisions.

In the following sections, we explain how the AI system is designed, how it evaluates candidates, and what safeguards and oversight we have in place to help ensure this technology is used responsibly and in alignment with relevant legal and ethical expectations.

System Design: Transparency, Control, and Reliability

Our AI system was built following a “glass box” philosophy: we aim for outcomes that are explainable and traceable to clear, job-related factors, rather than coming from an opaque “black box” algorithm. We embed transparency and user control into the foundation of the system. Key design features include:

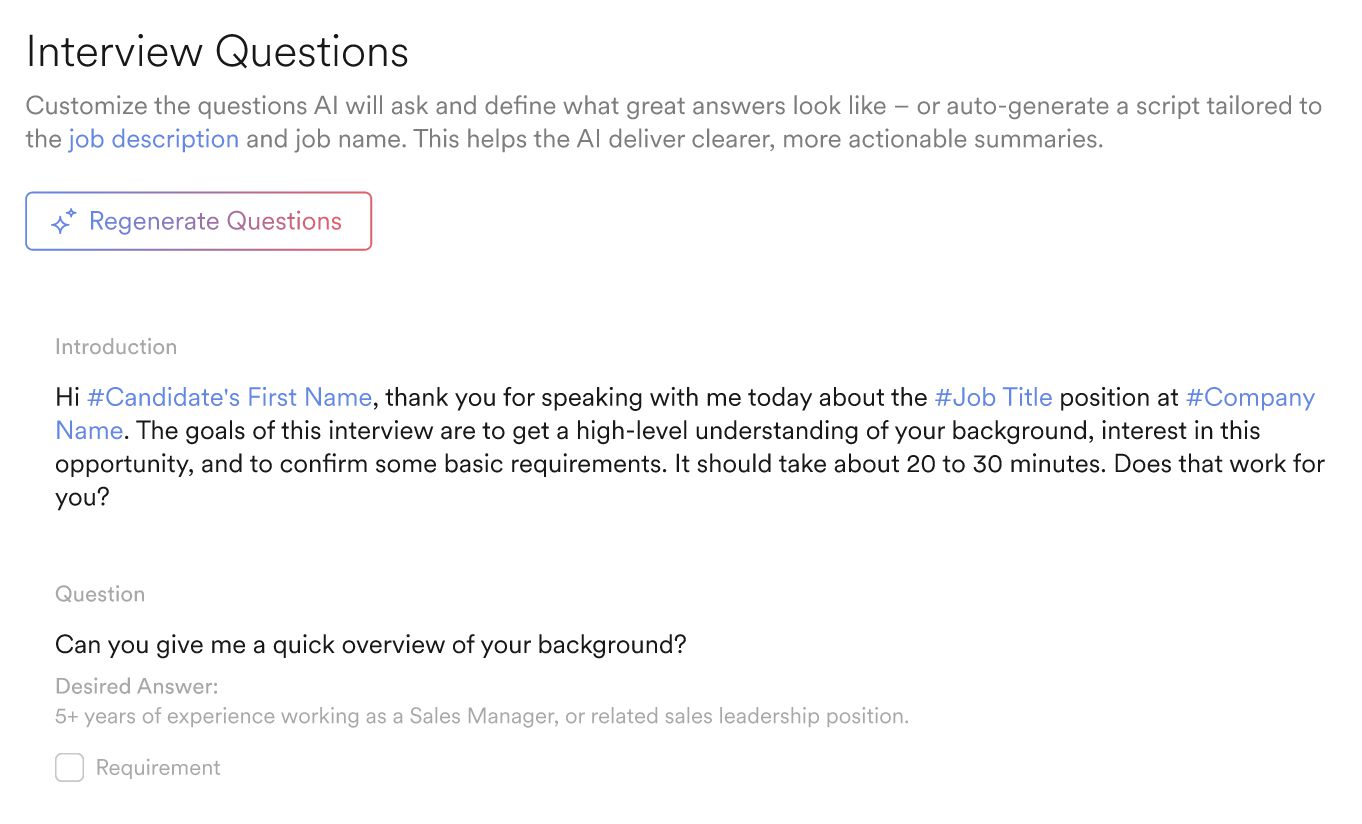

User-Controlled Interview Content:

Recruiters have full control over the interview questions used by the AI. The hiring team defines the questions for each role (either choosing from our library or writing their own) and determines any follow-up prompts or knockout questions.

The AI Screener is configured to ask the questions that the user has selected and approved, plus simple probing prompts to elaborate on an answer; it is designed not to introduce new topics outside the approved interview content. This helps ensure every interview stays focused on job-relevant topics. By letting humans curate the questions, we keep people in charge of what information is gathered from candidates and help ensure the process aligns with the organization’s needs.

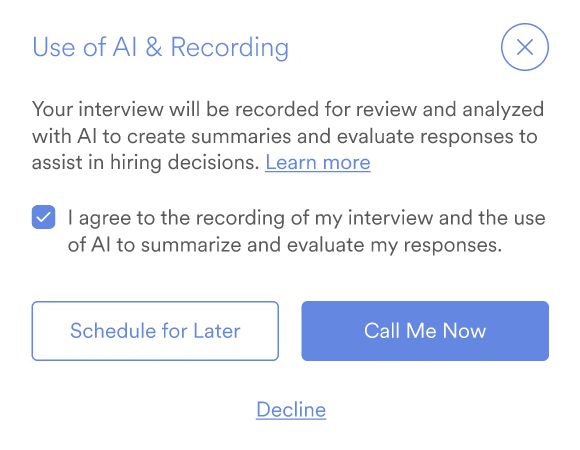

Built-in Candidate Notice & Consent (“Consent by Design”):

We have a clear consent flow for any AI-driven interview. Before an AI interview begins, the candidate is explicitly informed of the following:

– The AI interview will be recorded (audio) for review.

– An AI will analyze their responses to the predefined questions.

– How the AI will use their data (e.g., transcribing answers and evaluating them against job-related criteria).

– Why the AI is being used (to assist the hiring team with a consistent, efficient initial evaluation).

– Assurance that no hiring decision will be made solely by the AI; human recruiters will review all AI results.

– Their options: the candidate can consent and proceed, or opt out. If they do not consent, they will be offered an alternative process (such as a human-conducted interview or other accommodation, per the hiring company’s policy) and provided with the recruiter’s contact information.

The candidate must give affirmative consent (e.g., click “I agree”) before any AI engagement occurs. If they decline, the AI interview does not proceed. We never force or “auto-enroll” candidates into AI assessments without their agreement. This proactive consent process is designed to support compliance with applicable U.S. federal and state requirements, recognizing that obligations can vary by jurisdiction and employer workflow. It also aligns with the spirit of emerging AI-specific frameworks by informing candidates about the AI tool and providing an alternative upon request. All information is presented in plain, non-technical language so that candidates understand, and are comfortable with, the process. This approach is also designed to align with common international data protection expectations (e.g., notice, choice, and purpose limitation), and we work with clients to configure workflows consistent with their applicable obligations.

Multi-Persona AI Evaluation:

Hireguide’s AI doesn’t rely on a single algorithmic opinion. Instead, for each answer a candidate gives, our system uses multiple AI models (“personas”) acting like independent reviewers. Each AI persona analyzes the response from a slightly different perspective or method. We compare outputs across these evaluations to check for consistency; material disagreements or low-confidence outputs can be flagged for additional human review rather than presenting a potentially unreliable result as final. This “team of AIs” approach adds redundancy and can help reduce the likelihood of single-model errors or AI hallucinations (i.e., an AI model producing an analysis not grounded in the candidate’s actual words), but it does not eliminate the possibility of mistakes. In practice, this means the AI’s feedback is designed to be more robust before it ever reaches a human decision-maker.
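To make the cross-check concrete, here is a minimal Python sketch of one way persona outputs could be combined. It assumes each persona returns one of the three ratings this document uses (“Meets Requirements”, “Does Not Meet”, “Unsure”) plus a confidence between 0 and 1. The `PersonaResult` type, the `combine_personas` function, and both thresholds are hypothetical illustrations, not Hireguide’s production logic.

```python
from collections import Counter
from dataclasses import dataclass

# Hypothetical thresholds for illustration only.
MIN_AGREEMENT = 2 / 3   # share of personas that must agree on the majority rating
MIN_CONFIDENCE = 0.6    # minimum average confidence before a result goes unflagged


@dataclass(frozen=True)
class PersonaResult:
    persona: str        # hypothetical persona identifier
    rating: str         # "Meets Requirements", "Does Not Meet", or "Unsure"
    confidence: float   # 0.0 (no confidence) to 1.0 (high confidence)


def combine_personas(results: list[PersonaResult]) -> tuple[str, bool]:
    """Return (majority rating, needs_human_review) for one answer."""
    if not results:
        return "Unsure", True  # nothing to compare: always send to a human
    counts = Counter(r.rating for r in results)
    rating, votes = counts.most_common(1)[0]
    agreement = votes / len(results)
    mean_confidence = sum(r.confidence for r in results) / len(results)
    needs_review = (
        agreement < MIN_AGREEMENT            # material disagreement between personas
        or mean_confidence < MIN_CONFIDENCE  # low-confidence output
        or rating == "Unsure"                # insufficient data
    )
    return rating, needs_review


if __name__ == "__main__":
    answers = [
        PersonaResult("literal_reader", "Meets Requirements", 0.9),
        PersonaResult("skeptic", "Meets Requirements", 0.8),
        PersonaResult("summarizer", "Does Not Meet", 0.7),
    ]
    print(combine_personas(answers))
```

A majority vote is only one possible design; the property the sketch illustrates is that disagreement or low confidence produces a review flag instead of a silent final answer.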

Focus on Job-Relevant Content Only:

Our AI is deliberately narrow in what it evaluates: only the content of the candidate’s answers relative to the job criteria. It does not analyze a candidate’s personal characteristics or any information unrelated to the question. For example, we do not use facial recognition, video scanning of appearance, emotion detection, or similar biometric inference for scoring; our analysis focuses on the transcript content relative to the rubric. The system’s analysis centers strictly on the words the candidate speaks and their meaning in the context of the question. This focus improves explainability (we can point to the exact words or phrases that led to a given evaluation) and avoids introducing irrelevant or biased factors. Technically, the candidate’s spoken answers are first transcribed to text using an automated speech-to-text system. Transcription quality can vary with audio conditions, accents, and background noise, so we encourage human reviewers to refer to the original audio when needed. Everything is therefore evaluated based on textual content (what was said) against the predefined competencies, keeping the scope tight.

Deterministic, Rules-Based Scoring:

We design our AI’s scoring logic to be transparent and predictable. Instead of relying solely on a complex machine learning model to “score” an answer in a black-box manner, Hireguide’s approach is primarily rules-based, grounded in the criteria that the recruiter defines for each question. In other words, the rules for what counts as a good answer are set by humans (the hiring team), and the AI follows those rules. Given the same answer and the same defined criteria, the system is designed to produce consistent results, which makes it easier to understand and audit. We do use advanced AI components to interpret the candidate’s language (for example, recognizing that mentioning “resolving a team conflict” is evidence of teamwork), but these components operate within boundaries set by the explicit scoring rules. The final evaluation of an answer is essentially a check against the user-defined success criteria. This blend of AI for understanding language and rule-based logic for scoring provides the best of both worlds: the flexibility to understand nuanced human speech, and a clear, step-by-step decision framework that anyone can review.

Each of these design choices was made to support clarity, fairness, and user empowerment. Users control the content; candidates give informed consent; multiple models double-check the AI’s work; and transparent rules govern scoring. Explainability isn’t an afterthought for us; it’s built into the system from the ground up.

How Candidates Are Evaluated and Scored

A core aspect of explainability is understanding how the AI evaluates answers and derives its recommendations. Hireguide’s scoring process is designed to be transparent and based on predefined criteria, so both recruiters and candidates (if they request feedback) can understand why a certain outcome occurred. Here is the step-by-step process:

Recruiter Defines Evaluation Criteria:

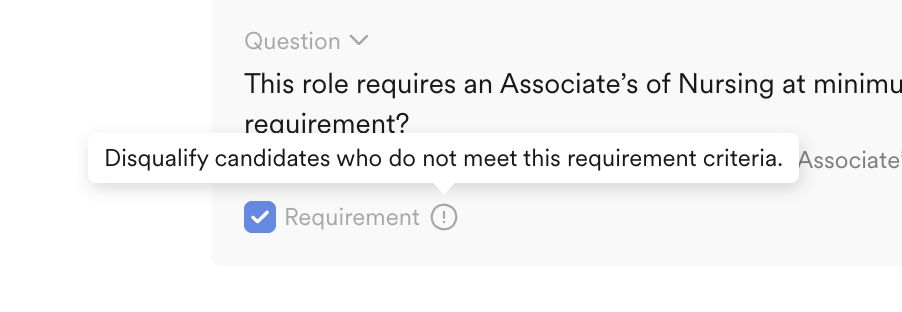

For each interview question, the recruiter or hiring team specifies what a good answer should include. This forms a scoring rubric for the AI. Typically, the recruiter lists key points or “desired response elements” that they are looking for in an ideal answer. For example, for a question like “Describe a time you resolved a team conflict,” the desired elements might be: explained the context of the conflict, took initiative to address it, demonstrated active listening to others, remained calm, mediated between team members, and achieved a positive outcome. The AI can suggest initial desired responses, but the hiring team knows its requirements best, so it approves or edits these expectations in the platform. The team can also mark certain elements as critical (must-have) or designate certain questions as “knockout” questions. A knockout criterion might be a required certification or legal eligibility: something that results in a strong pass/fail recommendation based on the employer’s rules. By defining criteria upfront for each question, the employer helps ensure the evaluation is job-specific, relevant, and transparent; the AI checks the candidate’s answer against the qualities the organization has decided are important for the role. (Hireguide’s job-description-to-script prompts help teams craft fair, unbiased criteria by grounding everything in the job title and description, drawing on HR best practices and EEOC guidance on job-relatedness.)
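The rubric described above can be pictured as a small data structure. The following Python sketch is illustrative only: the `DesiredElement` and `QuestionRubric` names, their fields, and the choice of which conflict-resolution element is marked as required are assumptions made for the example, not Hireguide’s actual schema.

```python
from dataclasses import dataclass, field


@dataclass
class DesiredElement:
    description: str
    required: bool = False  # a "critical" (must-have) element


@dataclass
class QuestionRubric:
    question: str
    elements: list[DesiredElement] = field(default_factory=list)
    knockout: bool = False                 # employer-designated knockout question
    approved_by_hiring_team: bool = False  # AI-suggested elements stay drafts until approved

    def required_elements(self) -> list[str]:
        """Descriptions of the elements the employer marked as must-have."""
        return [e.description for e in self.elements if e.required]

    def ready_for_use(self) -> bool:
        """Only an approved rubric with at least one element is used for scoring."""
        return self.approved_by_hiring_team and bool(self.elements)


# Example rubric for the conflict question; the required flag is a hypothetical choice.
rubric = QuestionRubric(
    question="Describe a time you resolved a team conflict.",
    elements=[
        DesiredElement("Explained the context of the conflict"),
        DesiredElement("Took initiative to address it"),
        DesiredElement("Demonstrated active listening to others"),
        DesiredElement("Remained calm"),
        DesiredElement("Mediated between team members"),
        DesiredElement("Achieved a positive outcome", required=True),
    ],
)
```

Until a recruiter sets `approved_by_hiring_team = True`, `rubric.ready_for_use()` returns `False`, mirroring the approve-or-edit step described above.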

AI Analyzes the Candidate’s Answer:

When the candidate answers a question (in video or text format), Hireguide’s system transcribes the response to text and the AI personas begin analyzing it. The AI compares the content of the answer to the desired elements defined in the previous step, looking for evidence that each key point was addressed. For instance, does the candidate’s answer mention listening to others’ viewpoints (evidence of active listening)? Did they describe a concrete outcome or result (evidence of a positive resolution)? The AI uses natural language understanding to recognize synonyms and related concepts, so even if a candidate uses different phrasing, the system can detect the relevant content. Conversely, if part of an answer is off-topic or irrelevant to the question, the AI disregards those parts and focuses only on the pertinent information. Multiple AI personas perform this analysis independently, and their findings are cross-checked (as described in the design section above). During this stage, the AI uses only the candidate’s words and the predefined criteria; it does not incorporate any outside data about the candidate. This supports a focused, job-relevant evaluation.

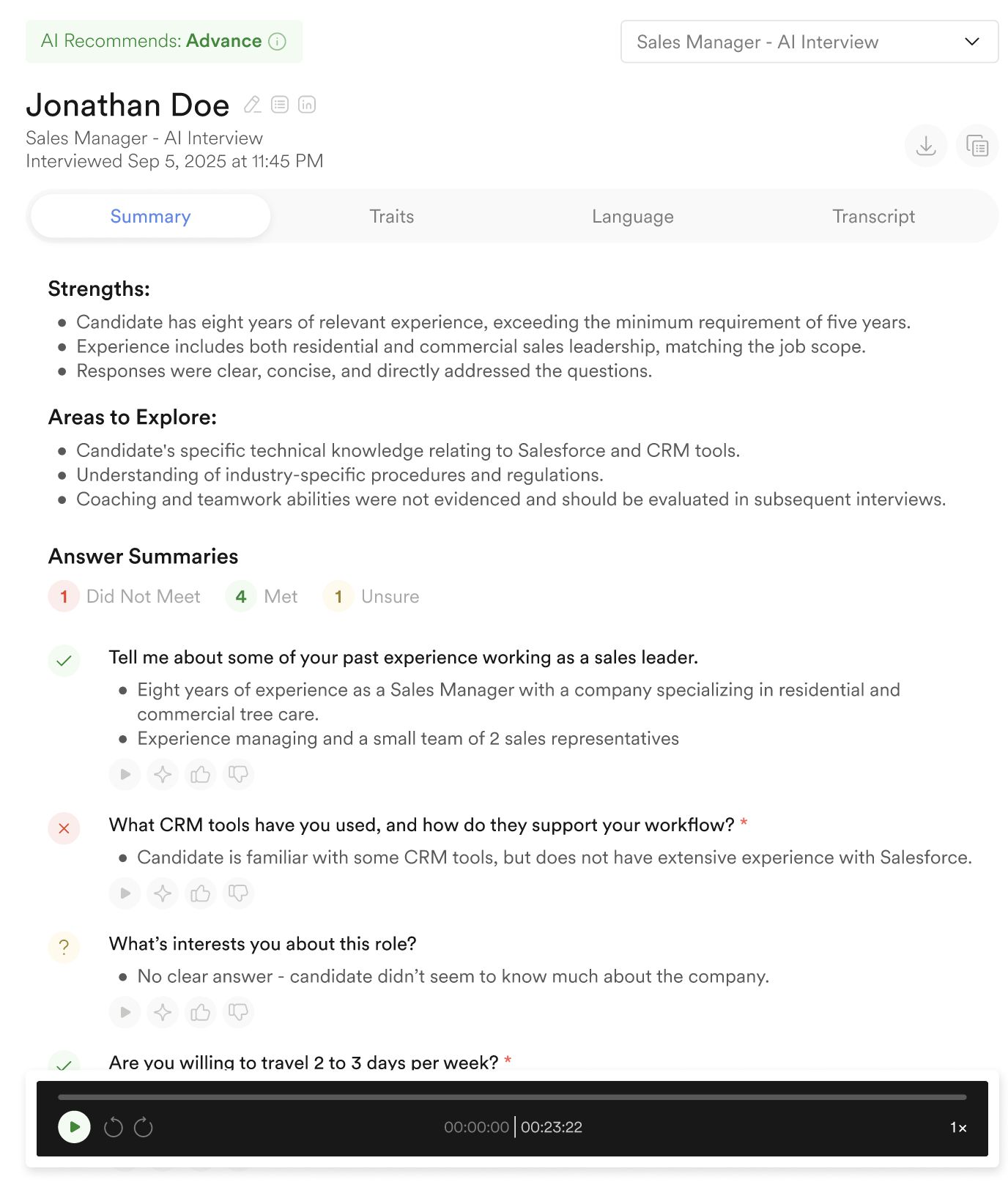

Scoring and Rating:

Based on the analysis, the AI determines to what extent the candidate’s answer meets the criteria. We typically use a simple, interpretable rating scheme for each answer:

“Meets Requirements” - the answer covered the expected points.

“Does Not Meet” - the answer does not meet the criteria.

“Unsure” - insufficient data.

These categories can be mapped to numeric scores if needed (e.g., 1 = Meets Requirements, 0 = Does Not Meet) for aggregation purposes. Employer-defined rules can also fix the outcome of a specific question. For example, if the role prefers Spanish fluency and the question is “Are you fluent in Spanish?”, a candidate who answers “No” might automatically receive “Does Not Meet” for that question, regardless of other answers. However, in this case the candidate is not automatically rejected: because Spanish fluency is preferred rather than marked as required, the answer does not trigger a knockout flag.
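The numeric mapping mentioned above could be used for aggregation roughly as follows. This Python sketch assumes, as a hypothetical design choice, that “Unsure” ratings are excluded from the average and counted separately so a human can look at them; the `aggregate` function is illustrative only.

```python
from typing import Optional

# Mapping from the rating categories to numeric scores, as described above.
SCORE_MAP = {"Meets Requirements": 1, "Does Not Meet": 0}
VALID_RATINGS = set(SCORE_MAP) | {"Unsure"}


def aggregate(ratings: list[str]) -> tuple[Optional[float], int]:
    """Return (share of scored questions met, number of "Unsure" ratings).

    "Unsure" ratings carry no numeric score in this sketch (an assumption),
    so they are left out of the average and reported separately.
    """
    for r in ratings:
        if r not in VALID_RATINGS:
            raise ValueError(f"unknown rating: {r!r}")
    scored = [SCORE_MAP[r] for r in ratings if r in SCORE_MAP]
    unsure = sum(1 for r in ratings if r == "Unsure")
    if not scored:
        return None, unsure
    return sum(scored) / len(scored), unsure
```

For four answers rated Meets, Does Not Meet, Meets, and Unsure, the sketch reports two of three scored questions met and one “Unsure” answer for review.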

If a desired element is marked as “Required” and the response does not meet it, the system flags a knockout miss and will typically recommend not advancing; a human reviewer makes the final disposition in accordance with the employer’s process. This way, both the recruiter and candidate (if feedback is shared) can understand the outcome of each question.
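The knockout rule in the preceding paragraph can be expressed as a small, deterministic check. In this hypothetical Python sketch, `evidenced` stands for whichever desired elements the AI found in the answer; the function only raises a flag and a suggested action, and deliberately leaves the final decision empty for a human reviewer.

```python
def knockout_check(required: list[str], evidenced: set[str]) -> dict:
    """Flag a knockout miss when any required element is absent from the answer.

    The result is advisory: `final_decision` is always None because the
    disposition is made by a human reviewer under the employer's process.
    """
    missing = [element for element in required if element not in evidenced]
    return {
        "knockout_miss": bool(missing),
        "missing_required": missing,
        "suggested_action": "recommend not advancing" if missing else "no knockout flag",
        "final_decision": None,
    }
```

For a question whose only required element is a certification, `knockout_check(["Holds the required certification"], set())` flags a miss and lists the missing element, while leaving `final_decision` unset.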

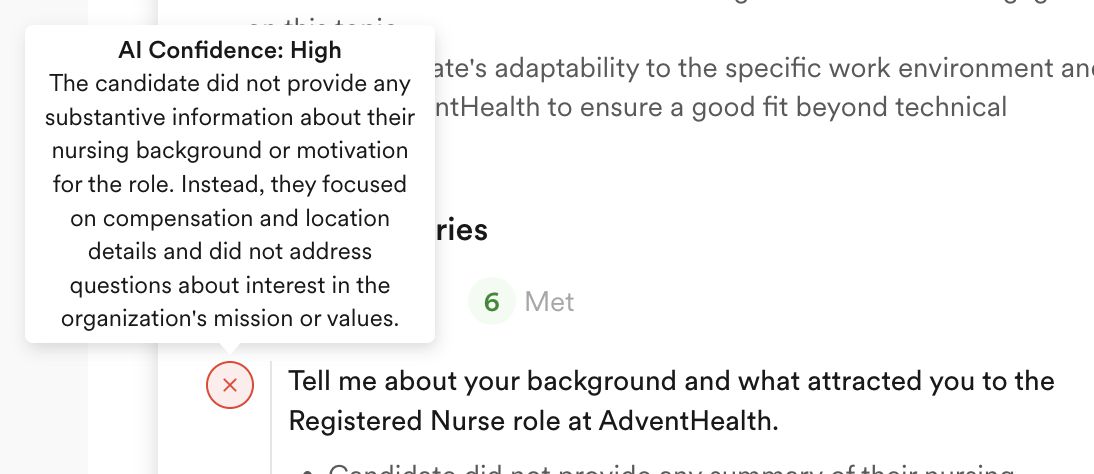

Confidence Assessment:

Alongside each evaluation, the AI provides a confidence score indicating how sure it is about its assessment. For instance, if the AI found strong, direct evidence for each criterion, it will report higher confidence. If an answer was vague or on the borderline of two categories, confidence will be lower. We include this to be transparent about uncertainty – a low-confidence rating flags to the recruiter that they should take a closer look at that particular response. By quantifying uncertainty, we avoid any false illusion that the AI is always 100% certain, and we encourage human reviewers to scrutinize unclear cases.
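One way to act on the confidence score is to build a review list that surfaces the least certain answers first. The Python sketch below assumes a confidence value between 0 and 1 and a hypothetical review threshold of 0.6; neither the function name nor the threshold comes from Hireguide’s product.

```python
def review_queue(evaluations: list[tuple[str, str, float]], threshold: float = 0.6) -> list[str]:
    """Return question IDs that need a closer human look, least confident first.

    Each evaluation is (question_id, rating, confidence). An answer is flagged
    when its confidence is below `threshold` or its rating is "Unsure".
    """
    for qid, _, confidence in evaluations:
        if not 0.0 <= confidence <= 1.0:
            raise ValueError(f"confidence out of range for {qid}: {confidence}")
    flagged = [e for e in evaluations if e[2] < threshold or e[1] == "Unsure"]
    flagged.sort(key=lambda e: e[2])  # lowest confidence first
    return [qid for qid, _, _ in flagged]
```

Sorting by confidence is a simple prioritization choice; an “Unsure” answer is flagged even when its confidence is above the threshold.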

AI Explanation and Evidence:

Critically, every score or rating the AI gives is accompanied by an explanation of why the AI judged it that way. After analyzing an answer, the AI generates a brief summary for the recruiter that shows which criteria were met and which were not, with references to the candidate’s own words as evidence. For example, the AI’s rationale might say: “Meets Requirements – The candidate described mediating a team dispute and mentioned achieving consensus (evidence of conflict resolution and teamwork).” Or: “Does Not Meet – The answer did not mention any outcome of the project (missing the ‘positive result’ criterion).” These explanations are given in plain language for clarity. This means the recruiter (and even the candidate, if they later request feedback) can see why a certain rating was assigned. We believe this level of transparency is crucial: it helps the hiring team interpret the AI’s outputs and supports meaningful feedback grounded in the candidate’s own input. There are no hidden factors – each evaluation ties back to whether the candidate’s answer did or did not contain the predefined job-related elements.

Aggregating Results and Human Review:

After the interview, the platform compiles a structured interview report for the recruiter. This includes each question’s AI rating, the breakdown of criteria (e.g. which key points were found or missing), any flags (like a critical criterion miss or low confidence), and the AI’s written reasoning for each question. Then, a non-AI algorithm may consider the AI rating and knockout flags and provide a preliminary recommendation for the candidate, but this is advisory only. At this stage, human recruiters review the AI-generated results. They can override or adjust any evaluation if they disagree or have additional context. For instance, if a recruiter reads the transcript and feels the AI misunderstood an answer, they can change the rating or simply note their own assessment. Our system is designed so a human makes the final decision on how to proceed with each candidate, whether to invite them to the next round or not. This review process helps ensure that human judgment remains paramount, with AI as a supportive tool.
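The review stage can be sketched as follows: each question result keeps the AI’s rating alongside an optional human override, and a simple non-AI rule produces an advisory label. This Python sketch is a hypothetical illustration; the `QuestionResult` fields, the 50% threshold, and the label wording are assumptions, and the output is never a hiring decision.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class QuestionResult:
    question: str
    ai_rating: str                      # "Meets Requirements", "Does Not Meet", or "Unsure"
    knockout_miss: bool = False
    low_confidence: bool = False
    rationale: str = ""                 # the AI's written reasoning
    human_rating: Optional[str] = None  # set when a recruiter overrides the AI

    @property
    def effective_rating(self) -> str:
        """A recruiter's override, when present, takes precedence over the AI."""
        return self.human_rating if self.human_rating is not None else self.ai_rating


def preliminary_recommendation(results: list[QuestionResult], advance_share: float = 0.5) -> str:
    """Rule-based, advisory-only label; a human makes the final decision."""
    if any(r.knockout_miss and r.effective_rating != "Meets Requirements" for r in results):
        return "advisory: do not advance (knockout flag)"
    if not results or any(r.low_confidence and r.human_rating is None for r in results):
        return "advisory: needs closer review"
    met = sum(r.effective_rating == "Meets Requirements" for r in results)
    if met / len(results) >= advance_share:
        return "advisory: advance"
    return "advisory: do not advance"
```

Because overrides flow through `effective_rating`, a recruiter who corrects a misread answer changes the advisory label without any change to the AI’s stored rating or rationale.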

Through these steps, Hireguide’s AI provides a consistent yet nuanced evaluation of candidate responses – and it documents the reasoning behind every evaluation. If at any point a recruiter is unsure about a score, they can drill down into the explanation and watch/listen to the original response to form their own conclusion. The scoring process is thus not just automated, but designed to be auditable and interpretable at multiple levels. Stakeholders can trace a hiring recommendation back to the specific criteria and candidate statements that contributed to it, which supports internal quality assurance and external review.

Language and Communication Signals (No Test Assessments)

Beyond evaluating answers against the predefined job criteria, Hireguide’s AI Screener can also perform a deeper analysis of the candidate’s language patterns to provide additional insights. This language assessment examines how candidates communicate, not just what they say. It leverages established psycholinguistic and computational linguistics research to describe aspects of a candidate’s communication style in the interview context based on their word choices and phrasing.

Scientific Basis:

Our language analysis is informed by established research in psycholinguistics and computational linguistics. Where we reference widely used frameworks (such as LIWC, the Linguistic Inquiry and Word Count dictionary), we use them as descriptive signals about communication patterns in the interview context. Findings in the research literature can vary by setting and population, so we present these outputs as supplementary indicators to support human review—not as definitive measures of a person.

What is Measured:

The language assessment can evaluate various communication indicators exhibited in the candidate’s answers. For instance, it may measure elements such as:

- Tone and Emotional Language: Does the candidate’s language convey positivity, enthusiasm, or empathy? (e.g., use of positive words vs. negative words, indications of excitement or frustration.)

- Pronoun and Perspective Use: Does the candidate frequently use “we” and other team-oriented language (which might reflect a collaborative mindset), or mostly “I”? Do they speak in the active voice (showing ownership) or the passive voice?

- Clarity and Complexity: The level of detail and clarity in explanations – are answers very concrete and specific, or abstract and high-level? This can indicate analytical thinking versus big-picture thinking. The AI looks at sentence structure complexity, vocabulary diversity, and coherence of the narrative.

- Confidence and Authenticity: Indicators of confidence or certainty in speech (for example, usage of confident phrases vs. hedging words like “maybe” or “I guess”) and authenticity markers (consistency in language, lack of excessive jargon or exaggerated claims).
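As a rough illustration of the kind of word-level counts behind indicators like pronoun use and hedging, here is a minimal Python sketch. The word lists are tiny, hypothetical examples chosen for this illustration; they are not LIWC dictionaries and not Hireguide’s actual lexicons, and a real system would need validated categories.

```python
import re

# Illustrative word lists only (hypothetical, not validated lexicons).
WE_WORDS = {"we", "us", "our", "ours"}
I_WORDS = {"i", "me", "my", "mine"}
HEDGE_WORDS = {"maybe", "perhaps", "possibly", "probably"}
HEDGE_PHRASES = ("i guess", "sort of", "kind of")


def language_signals(transcript: str) -> dict:
    """Compute simple descriptive counts from an interview transcript."""
    words = re.findall(r"[a-z']+", transcript.lower())
    text = " ".join(words)
    we = sum(w in WE_WORDS for w in words)
    i = sum(w in I_WORDS for w in words)
    hedges = sum(w in HEDGE_WORDS for w in words)
    # Match multi-word hedges on word boundaries so "ai guess" does not count as "i guess".
    hedges += sum(len(re.findall(r"\b" + re.escape(p) + r"\b", text)) for p in HEDGE_PHRASES)
    return {
        "word_count": len(words),
        # Share of first-person pronouns that are plural; None if neither appears.
        "we_share": we / (we + i) if (we + i) else None,
        "hedges_per_100_words": 100 * hedges / len(words) if words else 0.0,
    }
```

In line with this section, counts like these are descriptive signals for a human reader, not pass/fail criteria.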

All these aspects are tied to competencies that might be relevant for the role – for example, a customer service role might value empathy and clarity of communication, whereas a leadership role might value confidence and use of inclusive language.

Use of the Insights:

The output of this language analysis is presented as supplementary insights to the recruiter. It might highlight, for example, that a candidate’s answers were “highly analytical and detail-focused” or that their “communication style was very collaborative (frequent use of ‘we’) and positive in tone.” These observations can help the hiring team gain a richer picture of the candidate’s soft skills and fit for the team culture. Importantly, these language-derived insights are never used as automatic pass/fail criteria; they do not factor into the core job-related scoring or recommendations. They are there to inform and assist human decision-makers, not to make decisions by themselves.

We also apply guardrails to help ensure the language assessment remains fair and job-relevant. The AI’s analysis is restricted to traits that are pertinent to workplace performance and are evidence-based. We avoid any inferences about sensitive or protected characteristics – for example, the AI does not guess at the candidate’s age, gender, health, or other personal attributes that are not directly relevant or that would be inappropriate to assess from an interview. Any indicators derived (like measures of empathy or analytical thinking) are used with caution and transparency. They are framed in terms of how the candidate communicated in the interview context, not as absolute judgments about the person.

Another guardrail: if a candidate’s English language proficiency is not sufficient, we do not run the language analysis. This helps protect non-native English speakers from the potential disadvantages of language-based analysis. The feature can also be turned on or off as a company-wide setting.
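The two switches described above (a company-wide setting and a proficiency check) can be combined into a single gate. This Python sketch is hypothetical: the parameter names are assumptions, and treating an unknown proficiency result as “do not run” is a cautious design choice made for the example.

```python
from typing import Optional


def should_run_language_analysis(company_enabled: bool,
                                 proficiency_sufficient: Optional[bool]) -> tuple[bool, str]:
    """Decide whether the communication-style analysis runs for one candidate."""
    if not company_enabled:
        return False, "disabled by company-wide setting"
    if proficiency_sufficient is None:
        return False, "proficiency not determined; skipping to avoid disadvantage"
    if not proficiency_sufficient:
        return False, "English proficiency below threshold; analysis not run"
    return True, "analysis enabled"
```

Returning a reason string alongside the decision keeps the gate auditable: a reviewer can see why the analysis was skipped for a given candidate.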

In summary, our AI Screener’s language assessment provides a richer analysis of communication skills and style, harnessing well-established linguistic science. It offers explainable observations that a recruiter can interpret (often with examples from the candidate’s actual words) and use as part of a holistic evaluation. This helps ensure that qualities like communication clarity, empathy, or problem-solving approach – which are sometimes hard to quantify – can be considered in a consistent and evidence-informed way alongside the more direct question-by-question scoring.

English Language Proficiency Evaluation

In addition to assessing what candidates say and how they say it, Hireguide’s AI platform can also evaluate English language proficiency for roles where strong English communication is a key requirement. This feature is particularly useful when hiring in global contexts or for positions that demand a certain level of English fluency (for example, customer-facing roles in English, writing-intensive jobs, etc.). Our spoken language proficiency assessment uses specialized AI technology to measure the candidate’s spoken English skills in a standardized manner.

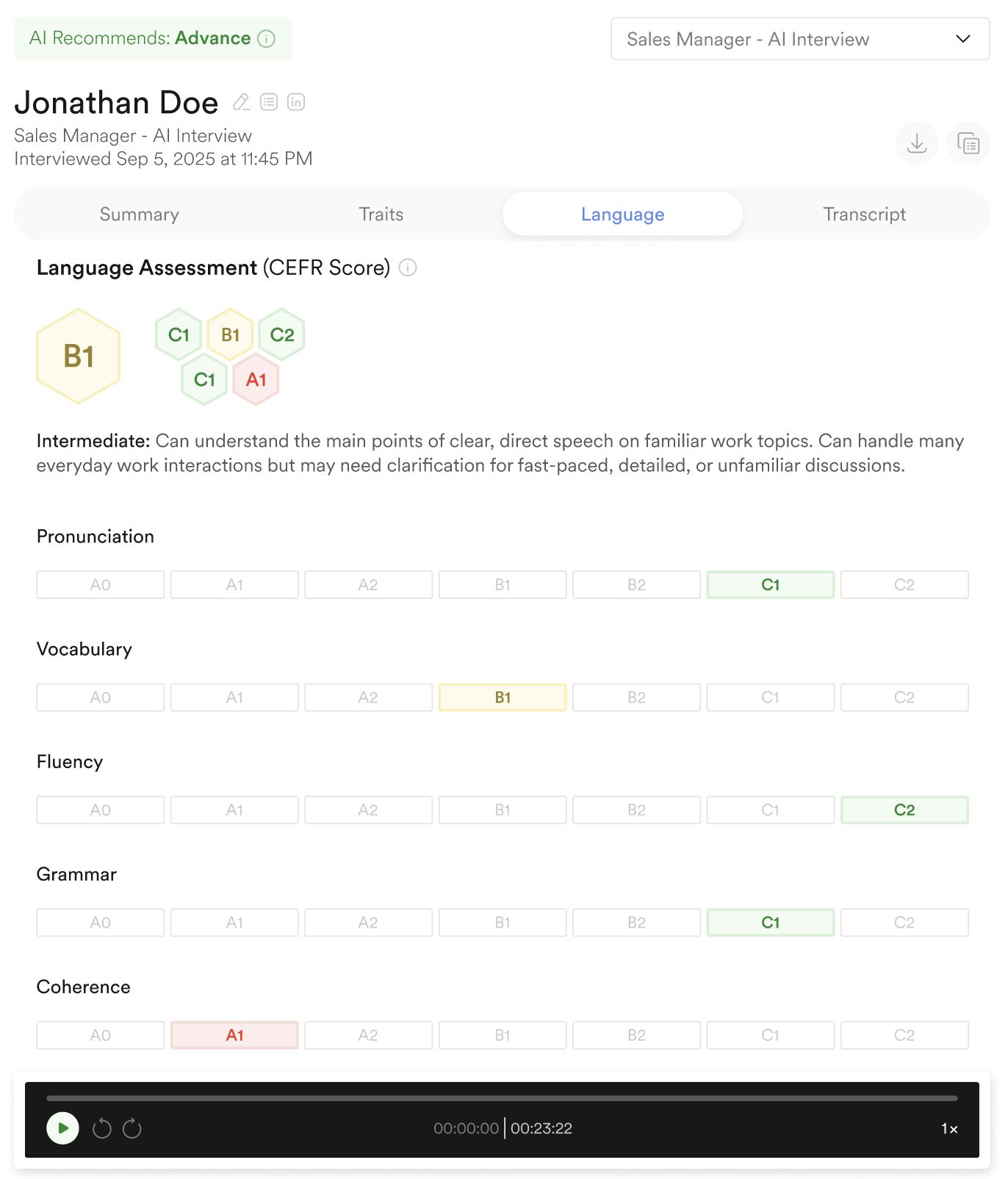

What it Measures:

The AI analyzes the candidate’s spoken responses (from video or audio interviews) on several dimensions of language proficiency:

- Pronunciation and Clarity: How clearly does the candidate pronounce English words? Are there frequent mispronunciations that could hinder understanding? The system can evaluate pronunciation patterns at a phonetic level.

- Fluency: This looks at the ease and flow of speech – for instance, the rate of speech, appropriate use of pausing, and whether the candidate speaks in complete, coherent sentences versus halting or fragmented speech. It can also consider intonation and stress patterns in speech.

- Grammar and Vocabulary: The AI evaluates the candidate’s use of English grammar (e.g. sentence structure, verb tense usage) and the richness of their vocabulary. Does the candidate use varied and appropriate words to express their ideas, or are there grammatical errors that impede clarity?

- Comprehensibility: Ultimately, the combination of the above factors contributes to an overall measure of how easily an English-speaking listener can understand the candidate. This is crucial for roles requiring effective communication in English.

Scientific Validity and Standards:

Our English proficiency feature leverages a specialized speech-evaluation engine and standardized scoring rubrics. Vendor documentation and internal testing indicate it can provide useful, consistent indicators of spoken English proficiency, though performance can vary by population, audio quality, and speaking style. Any mappings to frameworks such as CEFR or common exam scales (e.g., IELTS, TOEFL, TOEIC) are intended as approximate interpretations rather than official test results. By grounding the evaluation in standardized rubrics, we aim to make results interpretable and comparable across candidates within a given hiring context.

Fairness and Bias Mitigation:

We recognize the sensitivity around evaluating language ability, especially given the diversity of accents and dialects in English. The AI is designed not to penalize candidates for having an accent as long as they are understandable. The focus is on intelligibility and communication effectiveness, not sounding like a native speaker. Additionally, we evaluate performance across diverse speech patterns and accents and monitor for potential scoring disparities; where issues are identified, we investigate and adjust as appropriate. In short, the evaluation is about effective communication, and if a candidate can express themselves clearly and correctly, they can do well in this assessment regardless of accent or style.

Use of Results:

The English proficiency assessment provides recruiters with a standardized measure of a candidate’s spoken language skills. This can be especially valuable when hiring candidates from different regions or when a role has a minimum language requirement. Instead of relying on subjective impressions (which might be influenced by the interviewer’s own familiarity with certain accents or speech rates), the hiring team gets a consistent score and breakdown. For example, the report might say: “Pronunciation: 85/100 (clear, minor errors on a few words); Fluency: 90/100 (speech is fluid with natural pacing); Grammar: 80/100 (minor tense errors); Overall CEFR Level: C1 (Advanced).” This level of detail helps in two ways:

Screening for Requirements: If a job requires, say, advanced English proficiency, the recruiter can quickly identify if the candidate is likely to meet that bar.

Identifying Training Needs or Accommodations: If an otherwise strong candidate has slightly lower language scores, the employer might still hire them but plan for some language training. Conversely, strong scores can provide reassurance that language will not be a barrier.
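Checking an estimated level against a role’s requirement reduces to comparing positions on the ordered CEFR scale (A1 < A2 < B1 < B2 < C1 < C2). The Python sketch below is illustrative: the function name is hypothetical, and because this document treats CEFR mappings as approximate and keeps language scores out of advance/reject recommendations, the result is only an informational note for the recruiter.

```python
CEFR_LEVELS = ["A1", "A2", "B1", "B2", "C1", "C2"]  # lowest to highest


def language_bar_note(estimated: str, required: str) -> str:
    """Compare an approximate CEFR estimate with a role's stated requirement.

    Returns an informational note only; it is not fed into any
    advance/reject recommendation.
    """
    if estimated not in CEFR_LEVELS or required not in CEFR_LEVELS:
        raise ValueError("levels must be one of " + ", ".join(CEFR_LEVELS))
    if CEFR_LEVELS.index(estimated) >= CEFR_LEVELS.index(required):
        return f"Estimated {estimated} is at or above the stated {required} requirement."
    return (f"Estimated {estimated} is below the stated {required} requirement; "
            "review the recording and consider training or other context.")
```

For the sample report above, `language_bar_note("C1", "B2")` would note that the estimate meets a B2 requirement, leaving any action to the recruiter.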

The AI English proficiency scores are not included in the scoring or recommendation to advance or reject. They are provided as information for human reviewers to interpret and act on. As always, human judgment remains in the loop. The AI’s language proficiency analysis does not make decisions; it provides data. A candidate would never be automatically rejected solely due to an AI-determined language score. Instead, the information is used in context. Recruiters can also share relevant portions of this analysis with candidates (for instance, if a candidate asks for feedback on their English, the recruiter could tell them the assessment of pronunciation or grammar). We find that having a clearer, more consistent measure of language ability can promote fairness when language is truly job-relevant: it bases the evaluation on linguistic performance rather than purely subjective perception, and it holds all candidates to the same linguistic criteria if applicable.

In summary, the English language proficiency feature of Hireguide’s AI Screener is intended to help ensure that when language skills matter for a role, they can be evaluated consistently and equitably. It complements the content-based scoring by adding another layer of potentially relevant information for decision-makers, while adhering to strong expectations of validation and fairness.

Ethical AI Practices and Compliance Measures

Hireguide is committed to using AI ethically and in alignment with applicable law throughout our recruitment platform. Our approach is informed by principles of fairness, transparency, privacy, and human accountability. We follow relevant regulations and strive to adopt best practices that support responsible use. Below, we outline how we uphold key ethical AI practices and align with legal frameworks:

Fairness and Bias Mitigation

Our AI evaluations focus strictly on job-relevant competencies and criteria defined by the employer. We do not evaluate or score candidates based on personal characteristics that are unrelated to the job or could introduce bias (e.g., race, gender, age, ethnicity, disability status). We also do not use accent as a negative factor in scoring; where language ability is evaluated for job-relevant reasons, the focus is on intelligibility and communication effectiveness rather than accent. The system’s design (asking uniform questions in a consistent manner and using the same rubric for every candidate) helps ensure everyone gets a comparable opportunity to demonstrate their qualifications.

We take several steps to mitigate bias:

Continuous Monitoring: We monitor the AI’s recommendations in real-world use for signs of disparate impact. If potential disparate patterns are detected, we investigate and, where appropriate, adjust system behavior, guidance, or configuration options to address issues. Hireguide’s team also performs periodic reviews of outcomes, and we provide tools for employers to conduct their own adverse impact analysis using the data from our platform. Bias mitigation is an ongoing process, not a one-time calibration.

Bias-Resistant Criteria Design: We work to ensure that the criteria employers define are as neutral and merit-based as possible. Our customer success and I/O psychology experts advise clients on crafting interview questions and rubrics that align with EEOC guidance and the Uniform Guidelines on Employee Selection Procedures (i.e., being job-related and grounded in the role). The platform can flag a user-defined question or criterion that might be sensitive or not job-related, prompting a second look. By combining technology and human expertise, we help users avoid inadvertently introducing bias through poorly chosen interview content.
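Employers running the adverse impact analysis mentioned under Continuous Monitoring often start with the four-fifths rule of thumb from the Uniform Guidelines on Employee Selection Procedures: a group whose selection rate is less than 80% of the highest group’s rate is generally treated as evidence of adverse impact. The Python sketch below shows that calculation on hypothetical counts. It is a screening heuristic, not a legal determination, and it does not describe the specific tools in Hireguide’s platform.

```python
def impact_ratios(counts: dict[str, tuple[int, int]]) -> dict[str, float]:
    """counts maps group -> (number selected, number of applicants)."""
    rates = {g: selected / applicants
             for g, (selected, applicants) in counts.items() if applicants > 0}
    if not rates:
        return {}
    highest = max(rates.values())
    if highest == 0:
        return {}  # nobody was selected, so ratios are undefined
    return {g: rate / highest for g, rate in rates.items()}


def four_fifths_flags(counts: dict[str, tuple[int, int]], threshold: float = 0.8) -> dict[str, bool]:
    """True marks a group whose impact ratio falls below the four-fifths threshold."""
    return {g: ratio < threshold for g, ratio in impact_ratios(counts).items()}


# Hypothetical counts for illustration only.
example = {"group_a": (48, 80), "group_b": (12, 40)}
```

Here group_a is selected at 60% and group_b at 30%, giving group_b an impact ratio of 0.5, below the 0.8 threshold. Small samples can make this ratio unstable, which is one reason a flag calls for investigation rather than an automatic conclusion.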

Transparency to Candidates

We believe candidates have a right to know when AI is involved in their hiring process and how it impacts them. Our platform is transparent with applicants about AI use:

Upfront Disclosure and Consent:

As described in the Consent section above, candidates are informed before the interview about the use of AI, what it will do, and why it’s being used. We communicate the scope of the AI’s role and that a human will make the final hiring decision. This disclosure is explicit and requires the candidate’s agreement, rather than being buried in fine print.

Plain Language Explanations:

All our candidate-facing language is crafted to be clear and non-technical. We avoid jargon when explaining AI involvement. For example, we say "an AI tool will transcribe and analyze your interview answers and provide recommendations to our hiring team"— instead of an opaque statement. A candidate should come away feeling informed, not confused.

Feedback and Explanation of Results:

We encourage transparency in outcomes as well. Our platform provides analysis and feedback in a structured way that can be shared with candidates if the hiring company chooses. For instance, if a candidate requests feedback or an explanation for why they weren’t advanced, the hiring team can provide details grounded in the AI’s evaluation – e.g. which job-related criteria the candidate’s answers met or did not meet, along with examples. Because our AI’s evaluations are traceable to specific answer content, it’s possible to give a meaningful explanation rather than a generic rejection.

Open Contact for Questions:

We also provide contact information or help channels in case the candidate has questions about the process or technology. Candidates are encouraged to reach out if they need clarification or have concerns, and we (or our client) will address those queries.

In short, there is no mystery for candidates about the AI’s presence or purpose. This level of transparency supports compliance with notice and consent requirements that may apply in some jurisdictions, and it can also build trust. Candidates often appreciate knowing that a consistent standard is being applied, especially when the process is clearly explained and human review remains central.

Privacy and Data Protection

We treat candidate data with care and respect. All candidate information – including video recordings, audio, transcripts, and the AI’s evaluation results – is handled with strict confidentiality and strong security controls. Hireguide follows data protection best practices and helps our clients support compliance with privacy laws such as GDPR (in Europe) and CCPA/CPRA (in California), as applicable:

Data Usage Limits:

Hireguide’s data processing agreement (DPA) places strict limits on data usage: candidate interview data is used only for legitimate hiring purposes. We do not sell this data or use it to train unrelated models without permission, and we do not retain personal data longer than necessary. By default, we keep interview recordings and data only for a timeframe agreed with the client (typically through the duration of the hiring process or as required for record-keeping), and we support customized retention schedules.
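
A customized retention schedule can be thought of as a per-client setting checked against each record's age. The sketch below assumes, hypothetically, that the window is counted in days from the close of the hiring process; `RetentionPolicy`, `retain_days`, and `is_due_for_deletion` are illustrative names, not Hireguide's API.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class RetentionPolicy:
    """Hypothetical per-client setting: how long to keep interview data."""
    retain_days: int  # agreed with the client; may reflect record-keeping duties


def is_due_for_deletion(
    process_closed_on: date, policy: RetentionPolicy, today: date
) -> bool:
    """True once a record has been kept longer than the client's retention window."""
    return today > process_closed_on + timedelta(days=policy.retain_days)
```

With a 90-day window and a hiring process that closed on 1 March 2024, the record is retained through 30 May 2024 and becomes due for deletion on 31 May 2024.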

Consent and Control:

As discussed, candidates provide explicit consent for the recording and AI analysis.

Limited Access and Sharing:

Interview videos and AI analysis are only shared with authorized personnel involved in the hiring process (e.g. the relevant recruiters and hiring managers at the company). We do not share candidate data with any third parties except as needed to provide our service – and those we do engage (such as a cloud storage provider or transcription service) are bound by privacy and security obligations. We do not share or disclose interview content to others outside the hiring process. Notably, some laws restrict sharing video interviews except with persons whose expertise is needed to evaluate the candidate – we support this by ensuring access can be restricted to the hiring team (and our platform’s processors) as configured by the employer.

Security Measures:

Data is encrypted in transit and at rest as part of our security design. We maintain access controls and monitoring to help ensure that only authorized users can access sensitive information. Our data infrastructure is designed to align with industry-leading security standards, and we may undergo third-party audits (e.g., SOC 2) as appropriate; relevant documentation can be shared under NDA where available. Regular security assessments and penetration tests may be conducted to help protect against breaches. In the unlikely event of a security incident, we have an incident response plan and will notify affected parties in line with legal requirements.

Our commitment to privacy is a cornerstone of ethical AI use. We recognize that candidates are entrusting us (and our clients) with sensitive information about themselves, and we take that responsibility seriously.

No Autonomous Decisions – Human Oversight Guaranteed

Hireguide’s AI is strictly an advisory tool; it never has the final say on hiring outcomes. We design both our technology and our policies to help ensure a human is accountable for every decision:

Human Makes the Decision:

The platform is designed so that a human user (recruiter or hiring manager) reviews the AI’s recommendations and makes the actual hiring decision (e.g. deciding who to move forward to the next round or who to reject). We remind users in the interface that the AI’s scores are advisory and not a final verdict.

Communicated to Candidates:

Our candidate consent form and user guidelines reiterate that any decision to hire or not hire is made by people, not by an algorithm. This is important for managing expectations and supports compliance in jurisdictions that restrict hiring decisions based solely on automated processing. It assures candidates that they are not being “hired by a robot” or rejected without human consideration.

No Auto-Rejection Mechanism:

We have safeguards so that AI outputs do not automatically execute a rejection or advancement decision. For example, even if the AI were to score every answer as “Does Not Meet,” the system would flag the candidate as not recommended to advance, but a person still must confirm the next step. This helps ensure that every candidate gets human consideration at the decision stage. Recruiters can choose to give someone a chance despite a low AI score, using their professional judgment or additional information not captured in the interview.
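
One way to picture this safeguard is a review record in which the AI can only write a recommendation, while the decision field stays empty until a named human confirms it. The sketch below is a hypothetical illustration, not Hireguide's data model; `CandidateReview`, `Recommendation`, `Decision`, and `confirm` are assumed names.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Recommendation(Enum):
    ADVANCE = "recommended to advance"
    DO_NOT_ADVANCE = "not recommended to advance"


class Decision(Enum):
    ADVANCE = "advance"
    REJECT = "reject"


@dataclass
class CandidateReview:
    candidate_id: str
    ai_recommendation: Recommendation     # advisory output written by the AI
    decision: Optional[Decision] = None   # stays None until a human confirms
    decided_by: Optional[str] = None

    def confirm(self, decision: Decision, reviewer_id: str) -> None:
        """Intended single path for setting a decision; requires a named reviewer."""
        if not reviewer_id:
            raise ValueError("a human reviewer must confirm every decision")
        self.decision = decision
        self.decided_by = reviewer_id
```

For example, a review created with `Recommendation.DO_NOT_ADVANCE` has no decision at all; a recruiter can still call `confirm(Decision.ADVANCE, "recruiter-42")` to advance the candidate despite the low AI score.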

Escalation of Uncertainty:

If the AI ever encounters a scenario outside its rules (say, a candidate gives an answer that is highly unusual or the AI’s personas produce conflicting analyses), it will not attempt to handle it autonomously. Those cases are escalated for human review by default. The AI might identify the response as something it’s “not confident” about and explicitly mark it for the recruiter to evaluate manually.
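
The escalation rule can be sketched as a check that prefers human review whenever the evaluating personas disagree or any of them reports low confidence. This is an assumption-laden illustration: `PersonaAssessment`, the rating labels, the confidence scale, and the 0.7 threshold are hypothetical, not documented Hireguide values.

```python
from dataclasses import dataclass

MIN_CONFIDENCE = 0.7  # illustrative threshold only, not a documented setting


@dataclass(frozen=True)
class PersonaAssessment:
    persona: str       # hypothetical label for one evaluating persona
    rating: str        # e.g. "Meets" or "Does Not Meet"
    confidence: float  # hypothetical self-reported confidence in [0.0, 1.0]


def needs_human_review(
    assessments: list[PersonaAssessment], min_confidence: float = MIN_CONFIDENCE
) -> bool:
    """Escalate rather than resolve: missing input, disagreement, or low confidence."""
    if not assessments:
        return True
    personas_disagree = len({a.rating for a in assessments}) > 1
    any_unsure = any(a.confidence < min_confidence for a in assessments)
    return personas_disagree or any_unsure
```

The design choice is that uncertainty defaults to the human: the function never picks a winner between conflicting analyses, it only decides whether the answer must be routed to the recruiter.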

Empowering Recruiter Override:

At any point, a user can override the AI’s feedback. Our interface makes it easy for recruiters to adjust scores, add their own notes, or disregard the AI’s recommendation entirely for a particular question or candidate. We encourage recruiters to use the AI as a second pair of eyes, not as an infallible judge. They are the hiring experts and have the full ability to apply their context and expertise.

By keeping a human “in the loop,” we provide a safety net: if the AI misinterprets something or has a blind spot, the human decision-maker can catch it and correct course. Ultimately, Hireguide’s AI augments human decision-making; it does not replace it.

Auditability and Accountability

We recognize that for an ethics committee (or regulator) to trust an AI system, it must be auditable and accountable. We have engineered the platform such that key actions and decisions can be traced and examined:

Comprehensive Logging:

Every question asked, every score given by the AI, and every action taken by a human user in the platform is recorded in a secure audit log. We capture details like timestamps, the identity of the user (for human actions), and the content of the AI’s recommendations. For example, if a recruiter overrides an AI rating, the log notes that intervention. If the AI marked an answer as incomplete and asked a follow-up question, the log captures that entire sequence. This provides a step-by-step history of the interview and evaluation process for each candidate.

Traceable Decisions:

Because we log the AI’s rationale and evidence for each score (the criteria evaluations and snippets of the candidate’s answer that led to a conclusion), an auditor can retrace how a conclusion was reached. For instance, if an ethics committee asks “Why was Candidate X not moved to the next round?”, the hiring company can produce records from our system showing: the interview questions, the candidate’s responses (and transcript), the AI’s scores with explanations (including which required competencies were missing or present), and the human recruiter’s final decision note. This level of detail makes audits or investigations into potential bias/fairness issues more transparent.

Accountability:

The combination of logs and human oversight means accountability is clear. If a question arises about a hiring outcome, the company can determine whether the AI or a human judgment (or both) contributed to that outcome and examine the appropriateness. Our system tags each recommendation or action with its source (AI or a specific user), so there is no ambiguity in responsibility.
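
The logging and source-tagging described above can be sketched as an append-only list of entries, each carrying a timestamp, a candidate, and a source that is either the AI or an identified user. This is a minimal hypothetical illustration, not Hireguide's logging system; `AuditEntry`, `AuditLog`, the action names, and the `"user:<id>"` source format are assumptions.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class AuditEntry:
    timestamp: datetime
    candidate_id: str
    source: str   # "ai", or a human identity such as "user:recruiter-42"
    action: str   # e.g. "question_asked", "ai_score", "score_override"
    detail: dict  # e.g. rating, evidence snippet, or reviewer note


class AuditLog:
    """Minimal append-only log: the class exposes no method to edit or delete entries."""

    def __init__(self) -> None:
        self._entries: list[AuditEntry] = []

    def record(
        self,
        candidate_id: str,
        source: str,
        action: str,
        detail: dict,
        at: Optional[datetime] = None,
    ) -> AuditEntry:
        # Default to a timezone-aware UTC timestamp; copy detail so later
        # changes to the caller's dict do not alter the stored entry.
        entry = AuditEntry(at or datetime.now(timezone.utc), candidate_id,
                           source, action, dict(detail))
        self._entries.append(entry)
        return entry

    def history(self, candidate_id: str) -> list[AuditEntry]:
        """Step-by-step history for one candidate, in recording order."""
        return [e for e in self._entries if e.candidate_id == candidate_id]
```

For example, recording an `"ai_score"` of "Does Not Meet" from source `"ai"` followed by a `"score_override"` from `"user:recruiter-42"` yields a history whose sources read `["ai", "user:recruiter-42"]`, making clear which step came from the AI and which from a person.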

Ongoing Ethical Oversight and Improvement

Ethical AI use is an ongoing commitment. We maintain a cross-functional review process spanning product, data/engineering, and legal/compliance. Before a material AI change (e.g. a new AI feature or a significant algorithm update) is implemented, this process assesses it for potential bias, fairness, privacy, and transparency implications.

We also solicit feedback from our clients and, when possible, from candidates, to learn about their experiences and any concerns. If a client or user reports a potential issue (say, they suspect the AI may not be working fairly in a certain scenario), we investigate promptly and thoroughly. This feedback loop helps us catch real-world issues that our testing might not have anticipated.

Our aim is to comply with applicable requirements and align with best practices that those requirements seek to encourage. We view external reviews (such as this committee evaluation) as valuable opportunities to improve. Recommendations from auditors or new findings from academic research can be fed back into our development cycle.

Conclusion

In conclusion, Hireguide’s AI Screener Explainability Statement reflects our dedication to a hiring process that is transparent, fair, and under human control. We have articulated why we use AI (to enhance consistency, efficiency, and insights), how our AI is designed and operates (with user control, consent, multi-model checks, and rule-based logic), how candidates are evaluated and scored (with clear criteria, explanations, and human review), and what measures we take to support ethical use and legal compliance (fairness/bias mitigation, candidate rights, privacy protection, auditability, and oversight). We have also detailed our language and proficiency assessments, including their research-informed foundation and fairness safeguards, as these are important components of our AI Screener’s capabilities.